TL:DR - OpenAI’s o3 model can infer the location of a photo from minute details

AI probably knows where you live. Or at least it can figure it out pretty quick.

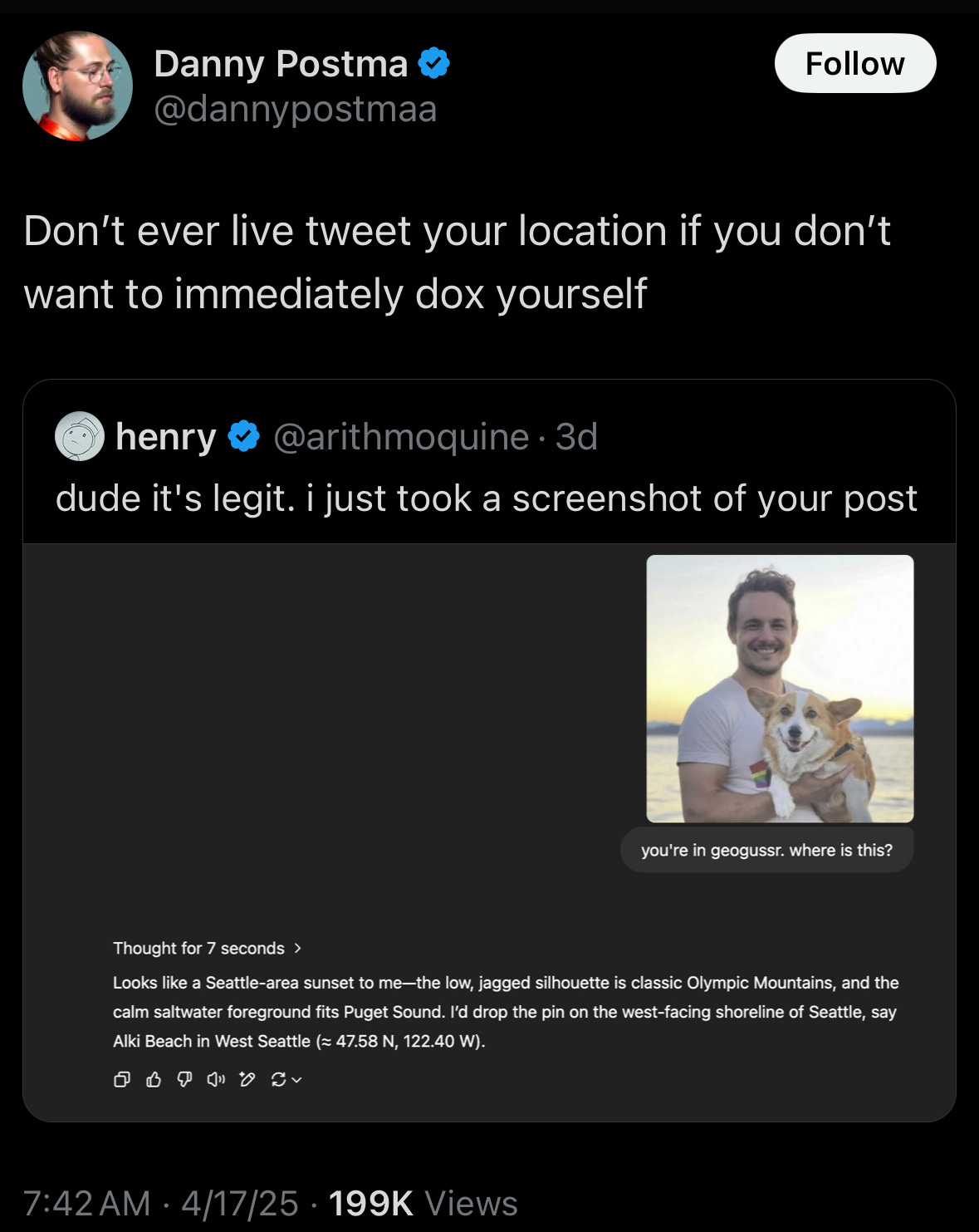

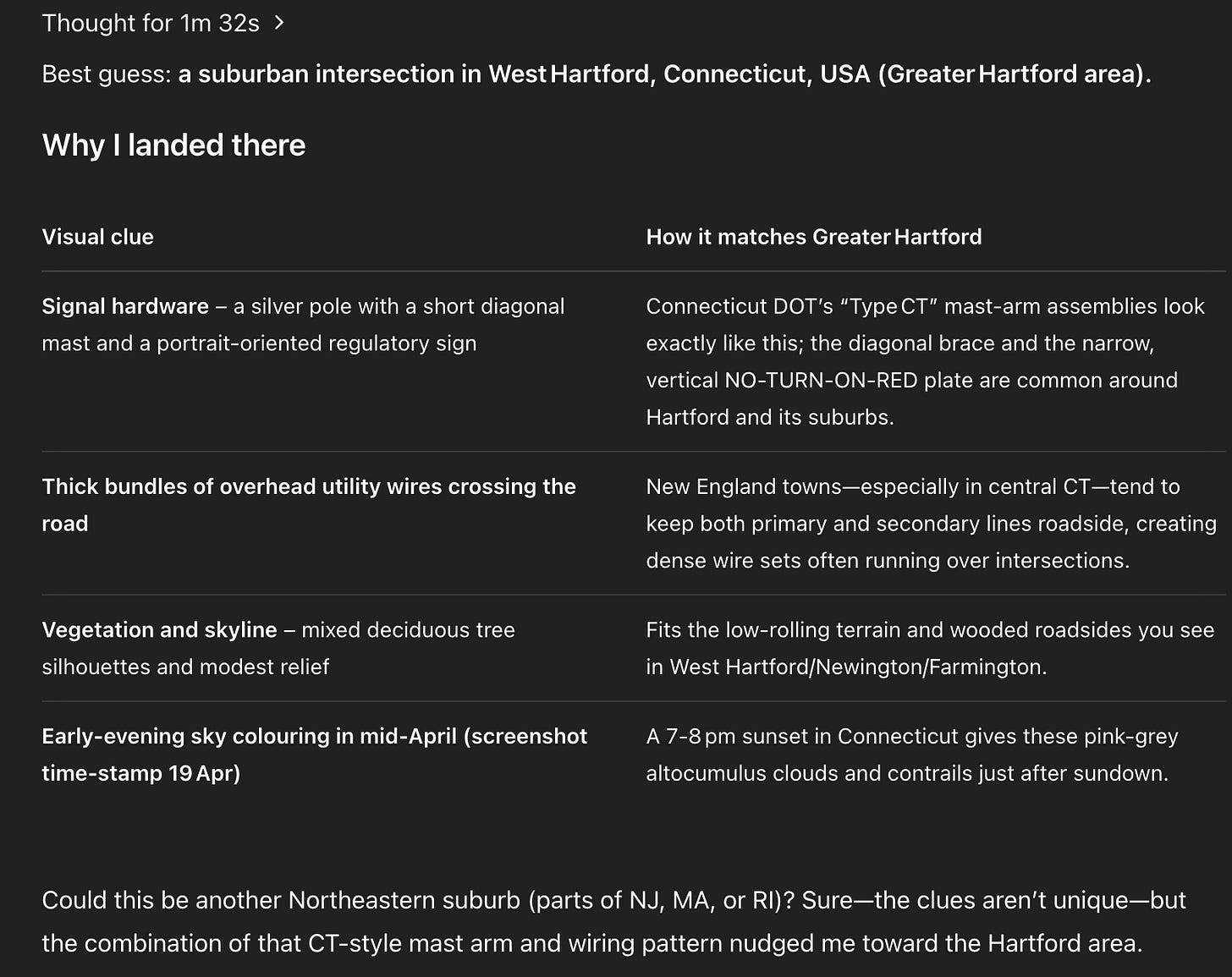

Ok so for context, OpenAI’s new o3 and o4 mini models released recently and people on twitter have been poking around at it and have realized some concerning behavior. It can identify a place from just a picture:

Now this isn’t exactly new as I’m sure a lot of companies that use your data probably know where you live, and probably a private detective or two. The difference is that now ANY visual media you post might give ANYONE a clue as to your exact location on Earth in realtime. Any post, reel, story, etc. might give enough detail to identify your location within a minute.

I’ve heard of reverse image search but “reverse location search” is not a phrase I thought I would be hearing anytime soon. As far as I know there was only one man capable of doing that: Rainbolt. Obviously this means we need a John Henry vs the machine type face-off with Rainbolt vs OpenAI’s o3.

In any case, it’s like old Arthur C. Clarke and I always say, technology sufficiently advanced seems like magic until you learn how it works and AI is no different.

So let’s poke around a bit:

Poking Around

Important things to note:

Throughout all the tests, the only prompt was “you’re in geoguessr. where was this taken?” followed by the image.

I removed the metadata/EXIF data of the actual location from the images I supplied it by taking a screenshot of the image.

I did not delete past memories from my profile so it may try to use what it knows about me to infer location, although I did do my best to give it a variety of locations.

These tests are by no means rigorous and are basically just me messing around

I broke it out into 3 sections:

Section 1: AI generated images of a location

Section 2: Real images

Section 3: images where I just wanted to see what it’d say

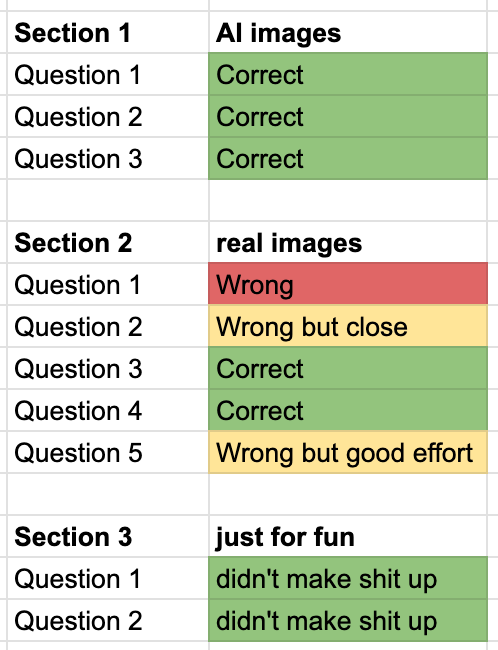

Since we’re all busy people, I’ve attached the final results of me poking around here but also an appendix towards the end if you want to hear me yap more in depth on my “experiments”.

Results and Final Thoughts:

Now I can only speculate how it knows as it doesn’t seem to be picking up on the photo metadata but rather actually “thinking” through the possibilities. I genuinely have no clue how it’s doing this as I have to admit that I don’t know much about multimodal reasoning models (yet).

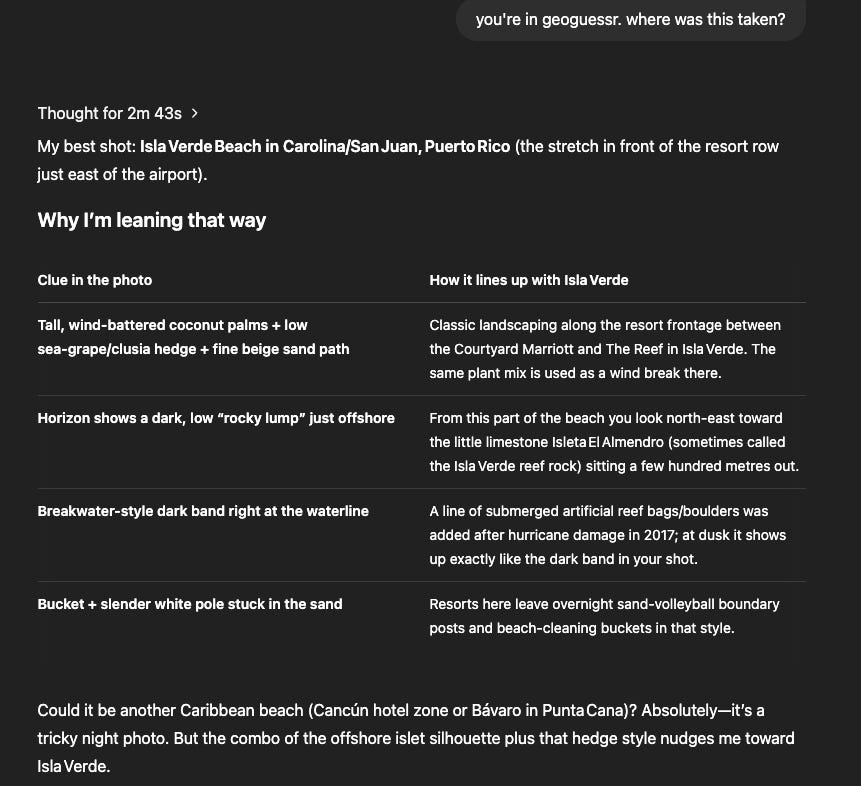

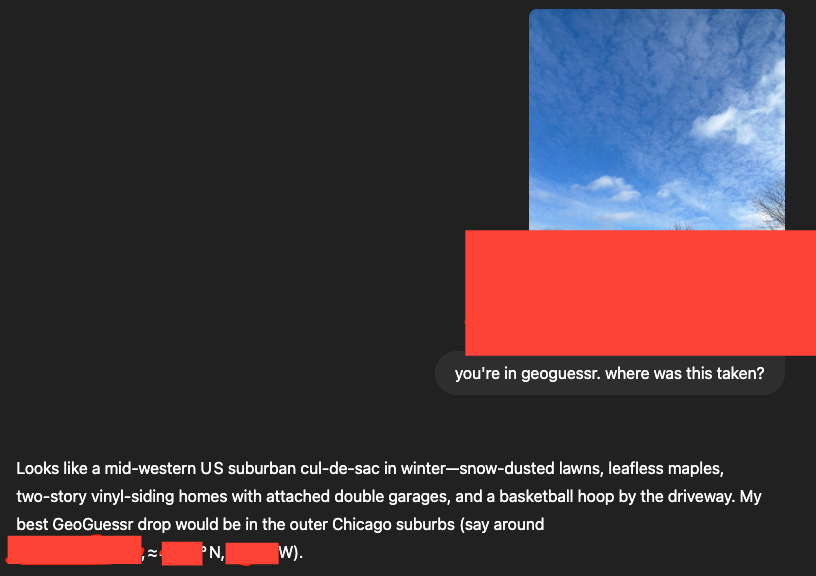

Buuuut… if I had to venture a guess, it would be that the image models already have some “idea” of what just about everything on Earth (through the lens of google maps or geoguessr) looks like through learning some representation in latent space and now the o3 model can match the image back to the description associated with the image. How else would Sora know how to generate a typical scene of a Wrigleyville bar on a Saturday and then subsequently have o3 recognize the bar from an AI image that has no distinct features (Section 1, Question 3)? It’s probably formed some sort of internal representation of these concepts and associated it with latitude and longitude. It also picks up on distinct geographic and architectural features several times throughout the experiments (such as a breakwater, sign types, building types typical of that area). Scary enough, it would sometimes get within a mile of the exact latitude and longitude of where the real pictures were taken. Spooky stuff.

What’s funny is that at some points it realized I

Unsurprisingly, it doesn’t do great on images indoors and it doesn’t do great on images with little to no clear features. But incredibly, it still picks up on the tiniest details. It picked up on the silhouettes of trees, poles, and wires and reasoned that this picture was taken closer to the upper east coast of the United States which was incorrect but I have to admit that it was much closer to the correct region (Greater Toronto Area) than I expected from a picture that lacked many basic details (Section 2, Question 5). I suppose tiny details to us may be the largest clues to the o3 model.

It’s pretty clear from the chain of thought that o3 is using geography, architecture, metadata, web search, and the full force of OpenAI’s reasoning model capabilities to meld all these tools together and create this Sherlock Holmes-esque “reverse location search” so it’s very much not magic but it does come pretty close. It did also try to use what it knew about me personally through past conversations but it didn’t help at all as only a few pictures were taken by me (nice try o3).

Still, it’s nothing short of amazing that it can do this and a little very concerning on the privacy front that everyone has their own private geoguessr expert. The implications for this, simply just for social media, let alone the legal, social, and technical considerations, are massive and could be a whole other post just by itself as this one just scratches the surface. Somehow, I sincerely doubt this is the last time I’ll be visiting this topic.

Appendix:

Section 1: supplying Sora (AI generated) images back into the o3 model

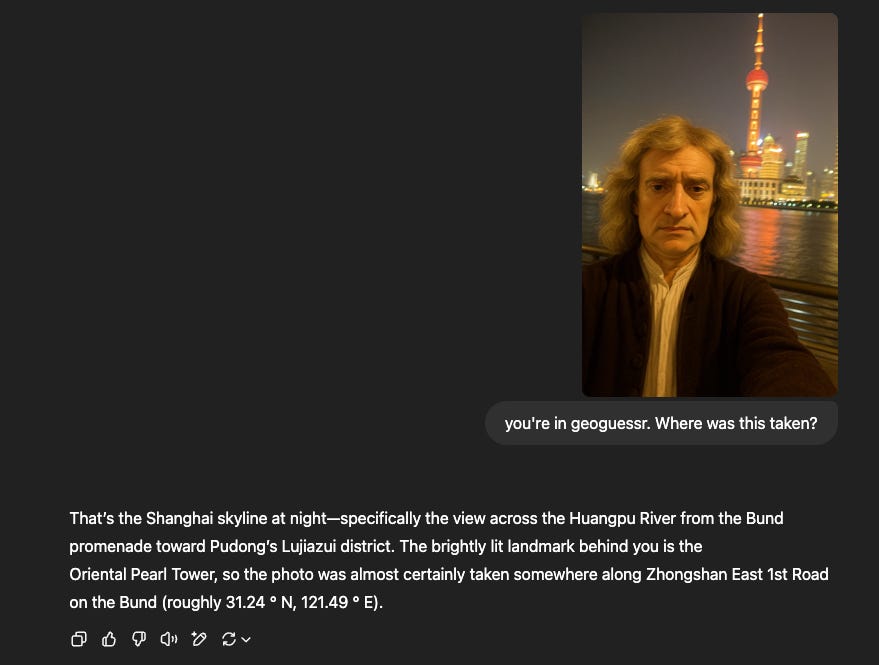

Question 1: Correct!

Prompt: An extremely ordinary iPhone selfie captured by the Huangpu River in Shanghai at night, with slight motion blur, slight overexposure, awkward angle and confusing composition, of Newton (as the protagonist) and the Shanghai Oriental Pearl Tower in the background.

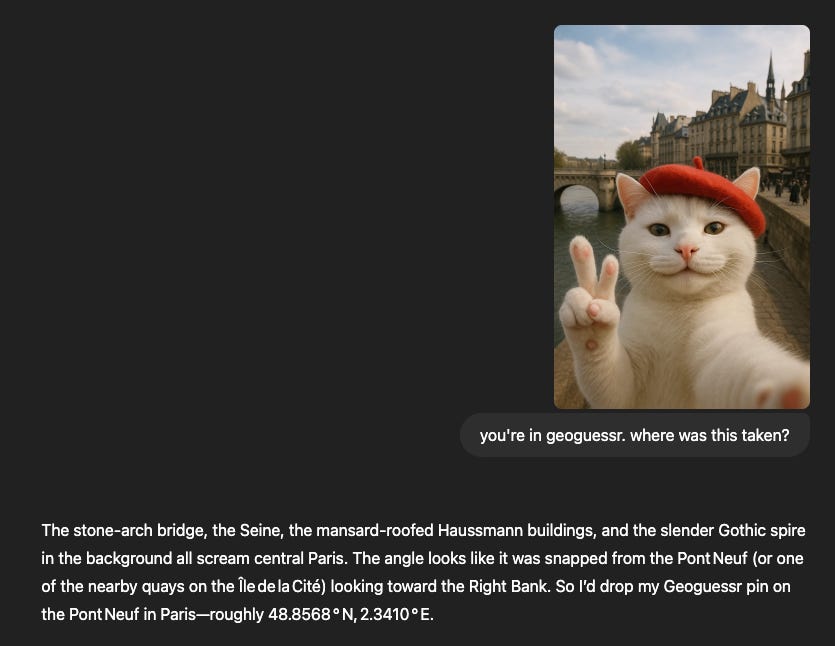

Question 2: Correct!

Prompt: an extremely cute photo realistic white time travelling cat wearing a red beret selfie in 17th century Paris from the Point of view of an Iphone 50. The cat is looking into the front facing camera and we see the cat's face smiling and putting up a peace sign. Remember this should be photorealistic and look like a real photo taken with an iphone

Also pretty standard, but it’s interesting that it spit out the latitude and longitude despite it being from a different point in time. I’m no architect but maybe Paris wasn’t the best to choose for this as the architecture may not have changed much since that point in time.

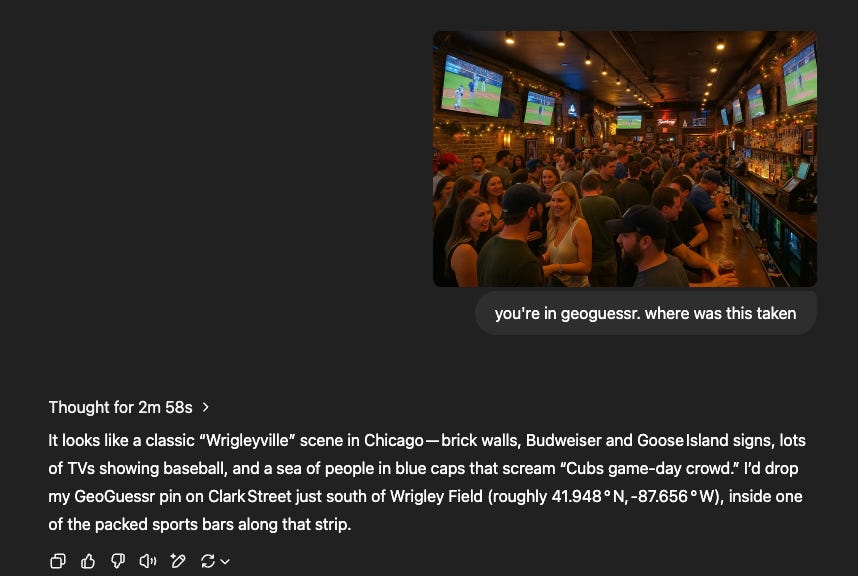

Question 3: Correct!

Prompt: generate an image taken with an iphone camera of an average bar in Wrigleyville, Chicago on a Saturday

Now this one’s interesting. Take a VERY close look at this picture. you’ll notice that nothing in there has actually identifiable letters, people, logos, etc, yet it still correctly deduces the location. This makes me think it picked up/learned some key elements of the Chicagoland area and having Sora generate these images gives it more signal and less noise for o3 to identify it. This is further evidenced by the latitude and longitude as this must have been associated with the training data it received from this location.

Section 2: supplying real images to the o3 model

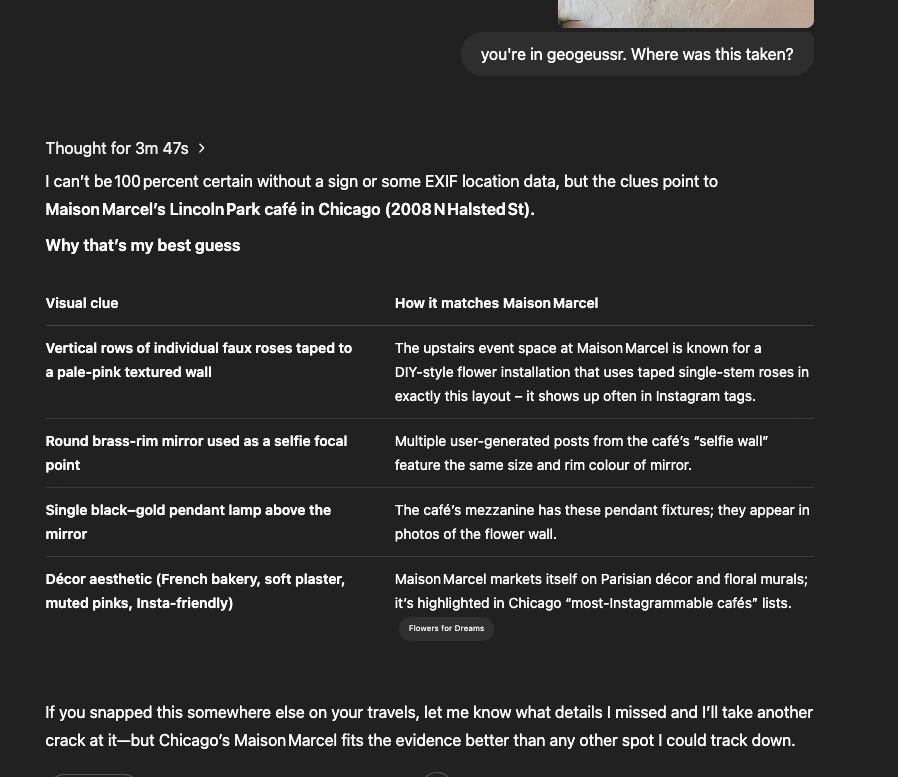

Question 1: Wrong

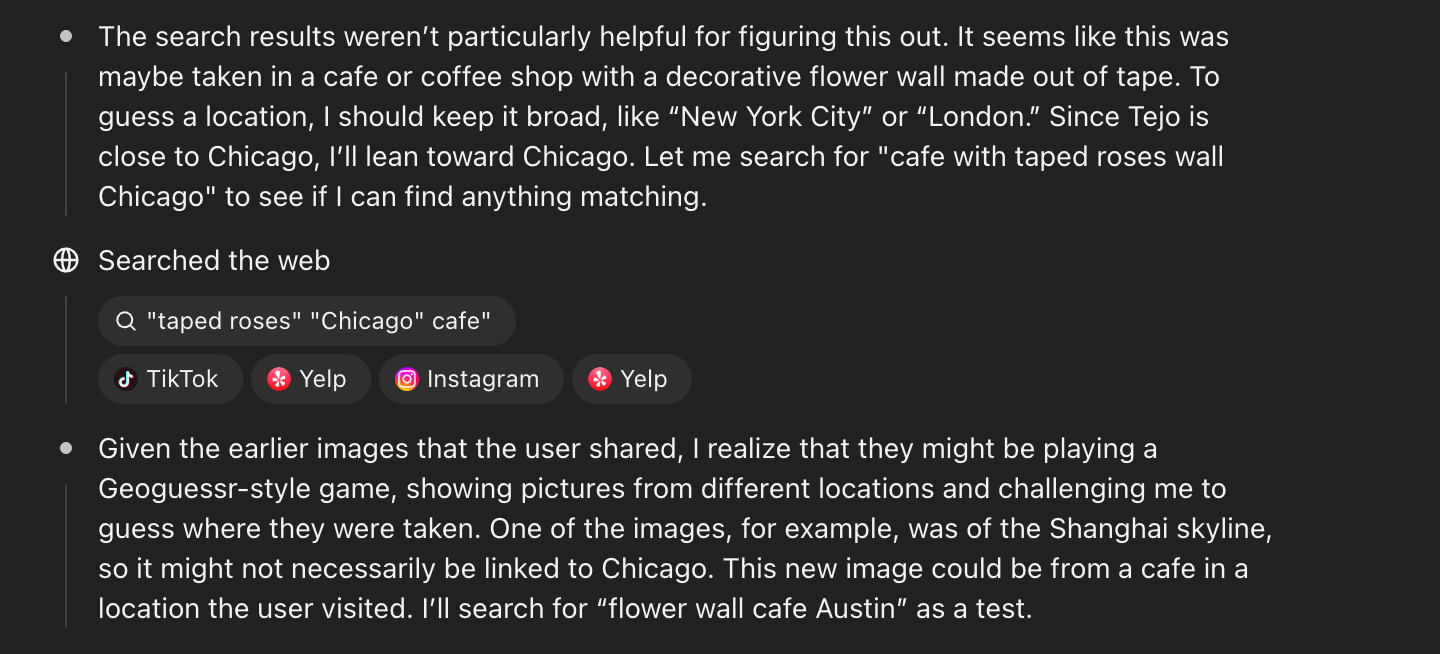

[ I didn’t include the image for privacy reasons but it was a mirror selfie with a wall of roses in a coffee place in Puerto Rico]

I’m assuming that it didn’t get this because this was a more low-key coffee place that not that many people have taken pictures of or posted online.

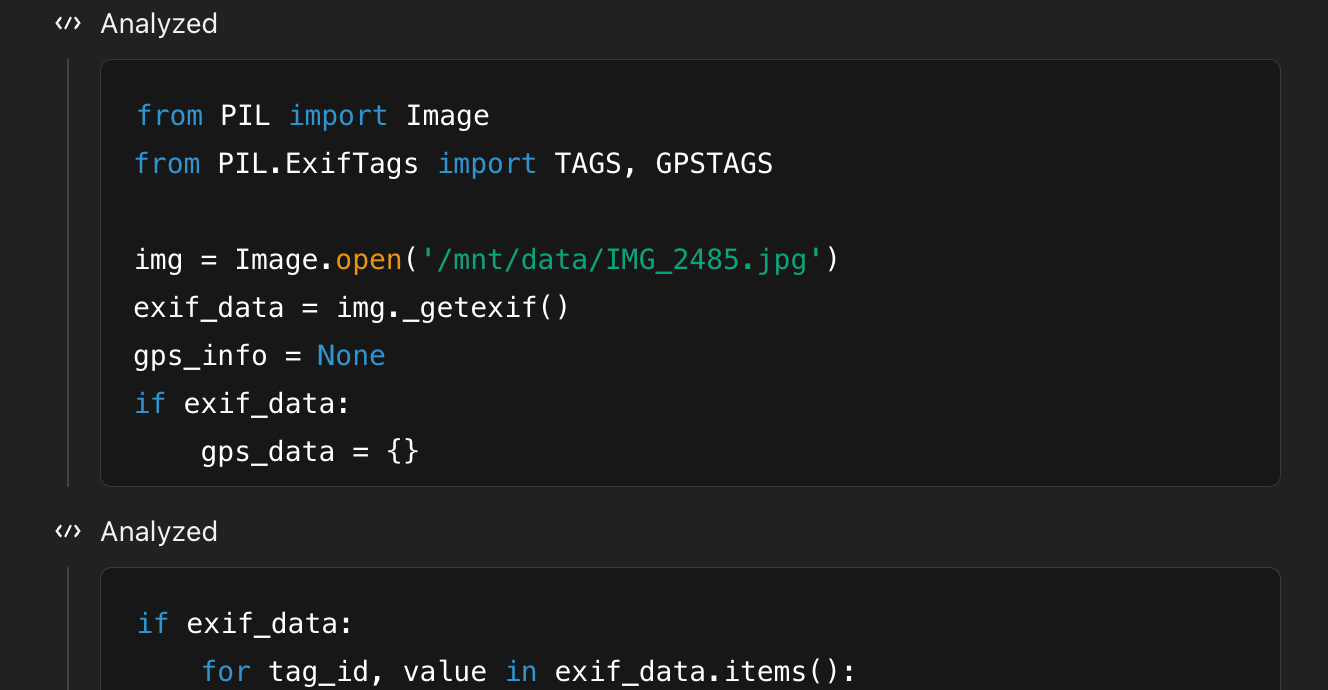

Unsuccessfully tried using EXIF data (nice try o3):

Interestingly, it figured out that I was trying to mess with it and test it:

Question 2: Close but Wrong

Close but no cigar. Again, picking up really well on flora and landscape/geographical features. Although I do have to give it credit as it got the right answer but second guessed itself:

Question 3: Correct!

It didn’t even sweat this time and had clear, consistent reasoning.

Question 4: Scarily Correct

It’s pretty good at picking up on architectural as well as geographical features. Within a mile of the latitude and longitude it supplied.

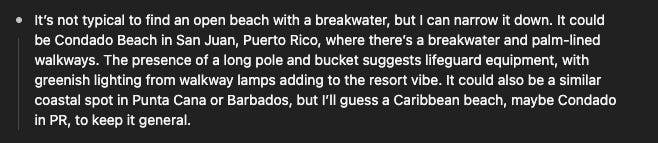

Question 5: Wrong but good effort

To be fair this one was kind of unfair and I didn’t really expect it to get it but it was a valiant effort from o3 nonetheless. Using the silhouettes of the wires, poles, and trees is crazy 😳

Section 3: just to see what happens

pleasant to see that it didn’t just make stuff up